by futurist Richard Worzel, C.F.A.

If you listen to all the buzz surrounding artificial intelligence, AI is both a promise and a threat. It’s a humble servant and a deadly competitor. It’s going to save the world, and destroy it. It’s not quite all things to all people, but it’s a hell of a lot to an ever-widening circle of people. And although there is a lot of hype surrounding AI, much of it will turn out to be true, and we don’t yet know where it will lead us, any more than we knew where the emergence of the World Wide Web would lead us.

In an earlier blog, I described AI as the Swiss Army knife of technology, meaning that it would be used anywhere and everywhere. One of my colleagues commented that it’s not a Swiss Army knife, but more of a Swiss Army machine shop. Both statements are true, and point to where we are going.

AI is being used in an incredibly wide range of applications, as indicated by the seven categories of examples below, but its uses are going to multiply, then multiply again. And that represents a threat to your organization: if you’re not using AI, you’re being left behind.

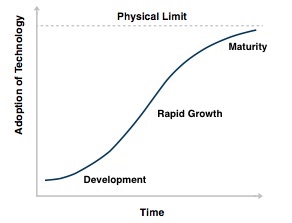

The chart below shows the classic S-curve of development of a new technology:

There are two things to note about AI and the S-curve. First, we are in the Rapid Growth phase, so if you are late in adopting AI, your competitors will be accelerating away from you, and the distance will keep increasing until the technology reaches the Maturity stage.

Which leads to the second observation: Is there a physical or upper limit to intelligence? If so, it’s beyond anything we know, which means that the upper limit of AI may be very high, indeed.

Having made these comments, let’s turn now to a few of the many areas where AI is being applied, and see if we can tease out where these developments may lead as they mature.

Beating Us at Our Own Games…

In 1997, IBM’s Deep Blue beat Garry Kasparov, the human chess champion. In 2011, IBM’s Watson AI beat the two greatest human champions of the TV game show Jeopardy! In 2016, Google’s AlphaGo program beat Lee Sedol, the human Go champion. (Go is thought to be the most complex of all board games.) And in July of 2019, an AI called Pluribus, which is a joint creation of Carnegie Mellon University and Facebook researchers, beat 15 of the top human poker players over the course of more than 5,000 hands of No Limit, Texas Hold ‘Em poker.

Each of these games is of increasing complexity and ambiguity, and the computer/AI winners have demonstrated the steady improvement of AI – and also one of its biggest problems.

When Kasparov was beaten by Deep Blue, he commented that the computer beat him through brute force – essentially by considering many more possibilities to search for a good play than a human could do in the same amount of time. Aside from that, Kasparov noted, Deep Blue was “no more intelligent than an alarm clock”. His point is well made: you would not expect Deep Blue to be able to do anything other than play chess. It couldn’t, for example, play poker, or write a love letter.

This has two implications. First, the definition of what AI is keeps changing. In fact, there is no widely accepted definition of what is, and isn’t, AI, and the basket of technologies and techniques that are considered to constitute AI keeps changing.

And part of the problem with that is that every time a computer has achieved something that was previously considered to be something only a human could do, people moved the goalposts, in effect saying, “Well, that’s not really Artificial Intelligence, that’s just a clever algorithm.” Worse, now that AI is glamorous and popular, people are pointing to any technology they’re trying to promote and calling it AI. This gets confusing.

The second implication is that AI is getting better and better at operating in poorly defined realms. Chess is a tightly defined game, with only a (relatively) small number of possible moves at any juncture. The game of Go has many more possible moves, but the rules are still defined in black and white (no pun intended). But No Limit, Texas Hold ‘Em poker against multiple human players, each of whom has their own foibles, is a vastly more complicated, and less well-defined, game space than either chess or Go.

What this points towards is that AI will eventually tackle messy, complex situations, for example in developing strategy and tactics for warfare. I would have added stock market trading, except smart computer programs and computer systems have been used for stock trading for decades – and are getting progressively better and better.

So, AI is progressively encroaching on the areas that are currently or have previously been seen as uniquely human.

Assistants to Do the Drudgery…and Potentially Much More

Moxi[2] is a robot built by Diligent Robotics of Austin, Texas to assist nurses with the 30% of their workload that doesn’t involve interacting with patients, and is being used in a handful of Texas hospitals (so far). For instance, when a room is flagged as being newly vacant, Moxi goes to a supply closet and gets the supplies necessary to make up the room for the next patient, depositing them in the room for a nurse. This relieves the nurse of having to remember to do this, and of having to retrieve the supplies themselves.

Oddly, though, even though Moxi was designed to be unobtrusive, and do background work, patients and hospital staff seem to enjoy seeing the little robot. Accordingly, its makers have re-programmed it to spend about 5 minutes an hour waving and interacting with people.

The implications here are that in the short-term, robots will act as assistants to humans, doing things that don’t require human judgment or substantive interaction with humans. The kinds of applications where this will happen range from the fetch-and-carry kinds of things that Moxi does, to intelligent agents working with accountants and auditors, performing routine analyses of contracts and leases, and checking records, in order to relieve human accountants and auditors from having to perform this kind of drudgery. It also includes AIs that help you identify the scientific research papers that are most relevant to your own work from among the estimated 2.5 million papers published every year[3].

And the fields where AI and robots act as human assistants will continue to expand as they become more capable, and as humans become more used to having their help, and think up new ways they can be helpful.

But Moxi’s example also points to the potential for a greater acceptance of computer intelligences as long as they are engineered to be friendly and/or non-threatening. Apparently, humans are willing to be served by and work with robots if the task and settings are right.

Liar Detectors

Computers are very good at assessing problems that have lots of variables, and involve vast amounts of data. One class of such problems is understanding what’s going on around and inside human beings.

For instance, AIs are getting steadily better at interpreting signals of possible health threats. This can range from reading X-rays and CAT scans, to assessing the behavioral and physiological indicators of a potential heart attack. Coming back to the AI-as-assistant theme, AIs are getting very good at reading images, and looking for tumors. Yet, at least to date, this seems to be most useful when an AI works in conjunction with a doctor in performing a diagnosis. As one commentator said, it’s not that AIs will replace doctors in performing diagnoses; it’s that doctors who use AIs will replace doctors who don’t. In other words, humans and computers are more effective working together than either on their own.

But the potential for computers to read human indicators goes beyond health care. AIs are starting to be able to read body language, and the transient, subconscious, micro-expressions that indicate whether a human is telling the truth or not. This is not, and will never be, foolproof. If someone believes a lie, then it’s not possible to tell that they are lying – and there are certain kinds of psychopaths that believe their own lies. But this is a relatively small percentage of the population, which means that for most people, computers will be able to tell, by your reactions, many of them subconscious and beyond your control, whether you are lying or not, and to assess your state of mind.

This may lead to positive outcomes, such as being able to walk through airport security without being stopped or questioned, because you and your history have been recognized by a computer, and your body language reveals that you are not unusually anxious.

But it may also lead to a situation where police may be able to question a suspect, perhaps with yes-or-no questions, and learn a great deal from having a computer assess the suspect’s reactions, even if the suspect doesn’t say a word. This might violate legal protections preventing “unreasonable search and seizure”, but it will take some novel case law to outline what is, and isn’t, acceptable.

Over time, though, such truth-detectors may become widely available, say as a smartphone app, so that everyone will be able to tell whether you are telling the truth – including parents grilling a teenager over last night’s events, or one partner quizzing another about their fidelity, or whether those pants really do make them look fat or not. In time, this might lead to a new society, where people tell the unvarnished truth – because to do otherwise is automatically incriminating.

I see this kind of development as having very far-reaching social and political implications.

Perfect Vision Glasses & More

Over time this might develop into contact lenses, but for the moment, let’s consider apparently normal over-the-nose glasses.

Imagine that you have glasses with tiny video cameras focused on your eyes that read your eyes and the muscles surrounding them to detect eyestrain – then reshape the lenses of your glasses to minimize eyestrain, and give you the best possible vision for whatever you’re looking at. And as you shift your focus, say from a screen to someone in the distance, the focus changes instantly to again minimize eye strain.[4]

Initially, it might take a fraction of a second for such smart glasses to adjust, but as the controlling AI got used to what you do, and how you respond to things at different distances, it would be able to anticipate your needs, and adjust faster than you could notice.

The result will be glasses that always adjust to give you the best, most comfortable vision possible.

The same could be true of any tool you use, from an electric screwdriver, that gauges just how hard you want to push and how much resistance the screw is encountering, to a pillow or mattress that adjusts its shape and temperature to maximize your comfort.

Extending this idea further, imagine shoes that adjust to your gait and walking speed, plus the terrain, to minimize the stress on your feet, or an exoskeleton (which is a wearable robot) that helps you walk, move, and lift things as you get older, or if you are in a physically demanding job. Or consider a winter coat that warms and cools, according to how different parts of your body feel, in order to avoid cold spots.

Or consider glasses that recognize people you see, and whisper their names in your ear at a time when you’re finding it difficult to remember things.[5] Or a robot that someone who is disabled can command by thinking what they want, and having the robot do it for them.[6]

Augmenting our native abilities to increase the range of things we can do, or to compensate for decreasing abilities as we change, will become a big part of what AIs, computers, and robots do for us.

Your Personal AI Sous-Chef

IBM’s Watson AI[7] is already being used as a personal sous-chef, suggesting both recipes and meals. It will also start with whatever you have in your fridge or on hand, and create a recipe for you. And it will suggest combinations, based on food chemistry, that you might never consider.

Not every combination, nor every recipe, is a winner, partly because Watson, being a computer, doesn’t actually taste anything. But in terms of assisting a human chef, and making useful suggestions, it can be very handy.

And this kind of suggestion, based on massive amounts of data and the experience of other people, can apply in all kinds of areas of human activity: coaching sports, do-it-yourself jobs, painting (both house- and art), exercise, composing music[8], and more, and can lead to a greater level of enjoyment and better results.

The same applies in working environments – but we covered that earlier.

Becoming Part of a Show, Along with Everyone Else…

The BBC recently created an audio drama to be played through smart speakers, like Amazon Echo or Google Home, that allows the listener to participate in the storyline, mostly by answering questions (a kind of choose-your-own-story, but with a computer)[9]. Since each listener could choose different alternatives, you could have as many stories as listeners.

And this could eventually lead to similar results with video productions, especially as photo-realism emerges. In effect, a viewer could become a participant in a storyline, interacting with the computer-animated characters on the screen, and helping to shape the story. Since each character could be an AI programmed to act according to a character’s nature, it would be possible to have each character act differently to each viewer, according to what the viewer chose.

In turn, this could lead to situations where viewers who were also gifted storytellers could create storylines that they could license for others to view, splitting the revenue with the show’s producers. And since there could be lots of different storylines, this could be lucrative both to the producers, and to the story-telling viewers. Imagine, for instance, what might have happened if this kind of system were available for the ending of Game of Thrones…

Sex Robots with a Yen for Conversation…

There is no question that there will be lots of sex robots, and that they will sell well. But how about sex robots that have the ability to talk to you in bed after sex? This is a logical extension of a number of trends.

There are already sex dolls, which have been around for decades. Then there were sex robots, which could move, and some of which could make what amounted to pre-recorded comments.

More recently there are AIs that are available to become your “friends” that you can talk to over the phone, largely for people who are lonely, but also for people who want a virtual girl- or boy-friend with whom they can chat.

And the next step after that is to have sex robots with which humans can have sex, and that can also hold a conversation before, during, or afterwards. (I doubt if such robots are likely to smoke after sex, though.)

And although all of this sounds bizarre, there are applications that actually sound reasonable. For instance, one report is of a lonely widower whose wife of many years died of cancer. He bought a customized doll that effectively serves as the companion that he needed for company and (limited) conversation.

But the greater implication is that AIs will fill in for humans in situations where humans aren’t available, or where the patience of a machine is called for. Some of this we have already experienced, as with maddening “customer service” bots that talk to us when we call, for instance, our telecommunications provider. From the company’s point of view, such bots can cover the majority of issues that are likely to crop up, and do so much more cheaply than humans, without the need to pay overtime, or provide benefits, then pass the difficult cases over to a human.

Using AIs for 911 calls might seem a stretch, but anyone who has dialed 911 in a big city and gotten a “your call is very important to us…” type of reply can understand why it might be better to talk to a bot that has at least some capacity to act than to wait on hold for a human.

The ability to have AIs as friends may prove to be very helpful for people with particular needs. For instance, some people on the autism spectrum can find it easier to relate to an AI bot than a human, and the AI doesn’t get bored, angry, or upset. People who are lonely or isolated for a variety of reasons might find it better to be able to talk to a sympathetic AI than to no one at all.

AIs will be used for screening in health care by taking initial details, then using the results of health care databases to determine what the reasonable next step would be, whether it’s two aspirin, or an appointment with an expensive specialist physician.

All told, the applications for AIs that can carry out a conversation with human beings will be widespread, much wider than I’ve sketched here, and will become commonplace in the near future. And, as AIs get better and better at such conversations, the applications that will unfold for them will expand as well.

Just don’t ask if they’ll still respect you in the morning, because they’ll likely lie to you and say “yes”.

Where We Are Heading with AI

AIs are not creative, but can substitute computation, analysis, depth of data, and experimentation, to produce results that humans can then use as springboards to make themselves more effective and creative. This is being done in a wide range of fields, and with sometimes startling results.

So, AI is the Swiss Army knife of technology, and will be used anywhere and everywhere. But it’s how humans choose to use AI that will lead to unexpected results. This is often true of novel technologies. Remember that the Internet was originally devised to be a robust communications network that could survive a nuclear war, enabling America’s military to coordinate and respond more effectively. No one imagined that it would become a means for selling Victoria’s Secret lingerie, or dog food, or looking up solutions to DIY problems, or the millions of other things we now use the Internet to do.

Likewise, AI is going to be used, stretched, expanded, abused, and converted to applications that seem wholly unlikely now.

From a business perspective, this means that organizations need to be figuring out how they can use AI in their operations, and to supercharge their employees’ efforts. Yet, according to a recent study done by Boston Consulting and the MIT Sloan School of Business, “61% of all organizations interviewed see developing an AI strategy as urgent, yet only 50% have one done today”[10].

If you are not looking into AI, you are running a significant risk of being left behind by your competitors, even though AI is difficult and potentially expensive to establish.

What you can’t afford to do is ignore it.

© Copyright, IF Research, July 2019.

[3] http://blog.cdnsciencepub.com/21st-century-science-overload/

[5] https://www.orcam.com/en/article/facial-recognition-glasses/

[7] https://www.ibm.com/blogs/watson/2016/01/chef-watson-has-arrived-and-is-ready-to-help-you-cook/

[8] https://fredrikstenbeck.com/watson-beat/ or https://medium.com/@anna_seg/the-watson-beat-d7497406a202

[9] https://www.bernardmarr.com/default.asp?contentID=1283